The Betrayed Promise: When Artificial Intelligence Reflects Our Prejudices

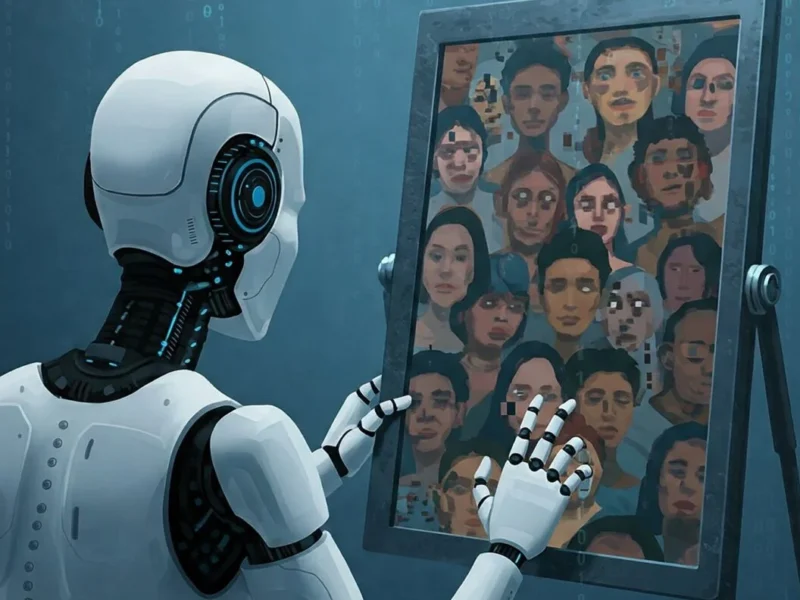

Artificial intelligence (AI) has often been celebrated as a revolutionary force capable of freeing us from human prejudices and limitations. The idea that algorithms, built on cold mathematical equations, could make decisions more rationally and objectively than we do was a tempting one.

But reality, unfortunately, is proving more complex. Far from being a panacea, AI can become a distorting mirror of our own imperfections, reflecting and amplifying the biases that still permeate our society.

The Original Flaw: How Data Teaches Prejudice to Machines

Machine Learning and Its Limits

To understand this phenomenon, we must start from the way machines “learn”. Algorithms are not born with an innate capacity for judgment; they acquire knowledge and skills by analyzing huge amounts of data. This is where the problem originates.

If the data we feed to AI reflect historical inequalities, cultural stereotypes, or implicit biases, the AI's decisions will inevitably be affected. This mechanism is the basis of so-called algorithmic bias.

Concrete Examples of Algorithmic Discrimination

In automated recruiting: An AI system for personnel selection, trained on data showing a predominance of men in leadership positions, may learn to treat the male profile as “ideal”, unintentionally penalizing female candidates. According to a study published in the Harvard Business Review, these systems can perpetuate gender discrimination even when gender is not explicitly included among the evaluation parameters.
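The proxy effect described above can be illustrated with a deliberately tiny sketch. It assumes an invented hiring history and a hypothetical binary feature, `career_gap`. In this made-up data, that feature is more common among women and was penalized by past decisions. The “model” only learns the historical hire rate for each value of that feature and never sees gender. Even so, its average score differs by gender.

```python
# Toy illustration of proxy bias. The "model" never sees gender, but it
# learns from a hypothetical feature (career_gap) that, in this invented
# data, is more common among women and was penalized by past decisions.
from collections import defaultdict

# Historical records: (gender, career_gap, hired). Invented for illustration.
history = [
    ("M", 0, 1), ("M", 0, 1), ("M", 0, 1), ("M", 1, 0),
    ("F", 1, 0), ("F", 1, 0), ("F", 1, 1), ("F", 0, 1),
]

def train(records):
    """Learn the historical hire rate for each career_gap value (gender is ignored)."""
    counts = defaultdict(lambda: [0, 0])  # career_gap -> [hired, total]
    for _gender, gap, hired in records:
        counts[gap][0] += hired
        counts[gap][1] += 1
    return {gap: h / total for gap, (h, total) in counts.items()}

def mean_score_by_gender(model, records):
    """Average model score per gender, although gender is not a model input."""
    scores = defaultdict(list)
    for gender, gap, _hired in records:
        scores[gender].append(model[gap])
    return {g: sum(s) / len(s) for g, s in scores.items()}

model = train(history)
print(model)                                  # hire rate per career_gap value
print(mean_score_by_gender(model, history))   # men score higher on average
```

In this invented data, men receive an average score of about 0.81 and women about 0.44. The gap arises purely through the correlated feature, so deleting the protected attribute alone does not remove the bias.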

In facial recognition: Software trained mostly on images of people with fair skin may struggle to identify the faces of people with darker skin accurately. Research by Joy Buolamwini of the MIT Media Lab showed that some commercial systems had error rates of up to about 34% for women with darker skin, compared with less than 1% for men with lighter skin.
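Findings like these come from disaggregated evaluation, which measures the error rate separately for each subgroup instead of reporting one overall figure. The sketch below uses invented labels and predictions for two hypothetical subgroups. It is not data from the study cited above.

```python
# Minimal sketch of a disaggregated evaluation: the same predictions are
# scored overall and per subgroup. Labels and predictions are invented.
from collections import defaultdict

def error_rates_by_group(groups, y_true, y_pred):
    """Misclassification rate computed separately for each subgroup."""
    errors = defaultdict(int)
    totals = defaultdict(int)
    for g, t, p in zip(groups, y_true, y_pred):
        totals[g] += 1
        errors[g] += int(t != p)
    return {g: errors[g] / totals[g] for g in totals}

groups = ["lighter_male"] * 4 + ["darker_female"] * 4
y_true = ["M", "M", "M", "M", "F", "F", "F", "F"]
y_pred = ["M", "M", "M", "M", "M", "F", "M", "F"]

overall = sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)
per_group = error_rates_by_group(groups, y_true, y_pred)
print(f"overall: {overall:.0%}", per_group)
```

Here an overall error rate of 25% conceals a 0% error rate for one subgroup and 50% for the other.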

In predictive justice: As we analyzed in our article on Digital Justice, the algorithms used to assess the risk of recidivism show systematic bias against ethnic minorities.

These are not hypothetical scenarios but concrete examples of how AI, even without malicious intent, can perpetuate discrimination.

The Many Faces of Algorithmic Bias

Types of Bias in AI

The problem of AI bias is multifaceted and manifests itself in different ways:

- Historical bias: When training data reflect the injustices of the past

- Representation bias: When some groups are under-represented in the dataset

- Confirmation bias: When algorithms reinforce existing prejudices

- Measurement bias: When the metrics used favor certain groups

Beyond the Data: The Role of Human Bias

It is not only a question of “dirty” data. Algorithm design, development choices, and the ways systems are used can also introduce distortions, as shown in our analysis of the ethics of artificial intelligence.

Sometimes bias is obvious, as when a system excludes a group of people outright. More often, however, it is subtle and hard to locate. It takes root in the metrics we measure, the parameters we set, or even the way we interpret the results.

The Social Impact of Algorithmic Bias

Practical Consequences for Society

AI bias is not only a theoretical problem. It has tangible consequences that affect the lives of millions of people:

- Discrimination in access to credit: Banking algorithms that systematically penalize certain communities

- Inequalities in health care: AI systems that underestimate the medical needs of certain demographic groups

- The perpetuation of educational inequality: As explored in our article on AI in education

The Vicious Circle of Discrimination

Algorithmic bias can create a vicious circle. Discriminatory AI decisions shape reality and generate new, distorted data. Those data in turn feed ever more discriminatory algorithms.
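A toy simulation can make this feedback loop concrete. The sketch below is loosely modelled on predictive policing and rests entirely on invented assumptions. Two hypothetical districts have the same true incident rate. Incidents are recorded only where the system sends patrols, and patrols go wherever the most incidents have already been recorded.

```python
# Toy feedback-loop simulation. Districts A and B have the SAME true
# incident rate, but only the patrolled district's incidents get recorded,
# and the system patrols wherever past records are highest. All numbers
# are invented for illustration.

def simulate(recorded, true_rate=10, rounds=5):
    recorded = dict(recorded)
    history = []
    for _ in range(rounds):
        # Decision: patrol the district with the most recorded incidents.
        target = max(recorded, key=recorded.get)
        # New data: only incidents in the patrolled district are recorded.
        recorded[target] += true_rate
        total = sum(recorded.values())
        # Share of all recorded incidents attributed to each district.
        history.append({d: round(c / total, 2) for d, c in recorded.items()})
    return history

for shares in simulate({"A": 6, "B": 5}):
    print(shares)
```

A small initial imbalance (6 versus 5 records) grows round after round, and district A's share of recorded incidents climbs from 0.76 to 0.92. The underlying reality, by construction, is identical in both districts.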

Towards Fair AI: Strategies and Solutions

Technical Approaches to Mitigating Bias

- Dataset diversification: Ensuring fair representation of all groups

- Fairness algorithms: Developing models that explicitly optimize for fairness

- Algorithm auditing: Systematic testing to uncover hidden bias

- Interpretability: As discussed in our article on algorithmic bias, it is essential to make algorithms explainable
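One widely cited pre-processing technique that connects dataset rebalancing with fairness goals is “reweighing” (Kamiran and Calders). It is swapped in here as an illustration and is not necessarily the approach the article has in mind. Each (group, outcome) combination receives the weight P(group) × P(outcome) / P(group, outcome). With those weights, group membership and outcome become statistically independent. The tiny dataset below is invented.

```python
# Sketch of "reweighing": weight each (group, label) pair so that group and
# label are independent in the weighted data. The toy dataset is invented.
from collections import Counter

def reweighing_weights(groups, labels):
    """Return w(g, y) = P(g) * P(y) / P(g, y) for every observed pair."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return {
        (g, y): (group_counts[g] / n) * (label_counts[y] / n) / (c / n)
        for (g, y), c in joint_counts.items()
    }

def positive_rate(group, groups, labels, weights=None):
    """Share of positive labels within a group, optionally weighted."""
    num = den = 0.0
    for g, y in zip(groups, labels):
        if g == group:
            w = 1.0 if weights is None else weights[(g, y)]
            num += w * y
            den += w
    return num / den

groups = ["M", "M", "M", "M", "F", "F", "F", "F"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]   # 1 = positive historical outcome
weights = reweighing_weights(groups, labels)

for g in ("M", "F"):
    print(g, positive_rate(g, groups, labels),
          round(positive_rate(g, groups, labels, weights), 3))
```

The raw positive rates are 0.75 for M and 0.25 for F. After weighting, both are 0.5. In practice the weights would be passed to a learning algorithm that accepts per-sample weights, so over-represented combinations count less and under-represented ones count more.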

The Role of Governance and Regulation

The European Union has proposed the AI Act, the world's first comprehensive regulation on AI, which includes specific provisions against algorithmic discrimination.

A New Pact Between Humans and Machines

Shared Responsibility

The fight against bias requires a collective effort involving:

- Developers: Implementing fairness by design

- Companies: Regular audits and transparency

- Legislators: Appropriate regulation

- Civil society: Monitoring and advocacy

Guiding Principles for AI Ethics

As we detail in our guide to AI ethics, the fundamental principles include:

- Transparency and explainability

- Human responsibility

- Fairness and non-discrimination

- Privacy and human dignity

FAQ: Frequently Asked Questions about Algorithmic Bias

What exactly is algorithmic bias? Algorithmic bias is an algorithm's systematic tendency to produce discriminatory or unfair results for certain groups of people. It often reflects biases present in the training data or in design decisions.

How can I tell if an algorithm is biased? Warning signs include differences in outcomes between demographic groups, a lack of transparency about the decision-making criteria, and significantly different performance across categories of users.
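The first warning sign, differences in outcomes between groups, can be checked with a simple audit that compares selection rates and their ratio. This sketch uses invented decisions for two hypothetical groups. Its 0.8 threshold borrows the “four-fifths” rule of thumb from US employment-selection guidelines. That rule is a screening heuristic, not proof of discrimination.

```python
# Sketch of a simple outcome audit: compare selection rates across groups
# and compute their ratio. Data are invented; the 0.8 threshold is the
# "four-fifths" rule of thumb, used here only as a screening heuristic.
from collections import defaultdict

def selection_rates(groups, decisions):
    """Fraction of positive decisions (1) for each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for g, d in zip(groups, decisions):
        totals[g] += 1
        positives[g] += d
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest

groups = ["A"] * 5 + ["B"] * 5
decisions = [1, 1, 1, 1, 0,  1, 1, 0, 0, 0]

rates = selection_rates(groups, decisions)
ratio = disparate_impact_ratio(rates)
print(rates, ratio, "flag for review" if ratio < 0.8 else "ok")
```

Group A is selected 80% of the time and group B 40% of the time. That gives a ratio of 0.5, well below the 0.8 screening threshold, so this system would warrant closer review.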

Can bias be completely eliminated from AI? Eliminating every form of bias is extremely difficult. It can, however, be reduced significantly through conscious design, data diversification, rigorous testing, and continuous monitoring.

Who is responsible when an algorithm discriminates? Responsibility is often shared among developers, the companies that implement the system, and the institutions that use it. Clearly assigning responsibility is one of the central themes of emerging regulation.

How does algorithmic bias affect daily life? Bias can affect job opportunities, access to credit, medical diagnoses, recommendations, education, and many other aspects of everyday life, often in ways that are invisible to users.

Conclusion: The Future of AI Depends on Our Choices

Artificial intelligence has the potential to radically improve our lives, but this potential will not be realized automatically. As highlighted in our reflections on surveillance and AI, we need to stay vigilant about the risks while we work to maximize the benefits.

We must forge a new covenant between humans and machines, based on transparency, responsibility, and awareness. In this covenant, we recognize the limits of AI as a tool and always keep fundamental human values at the center: fairness, justice, and dignity.

The future of fair AI depends on the choices we make today. Every algorithm designed, every dataset curated, and every implementation decision is an opportunity to build a more equitable world, or to perpetuate existing injustices.

The challenge is great, but so is the opportunity to create technologies that truly serve the whole of humanity.