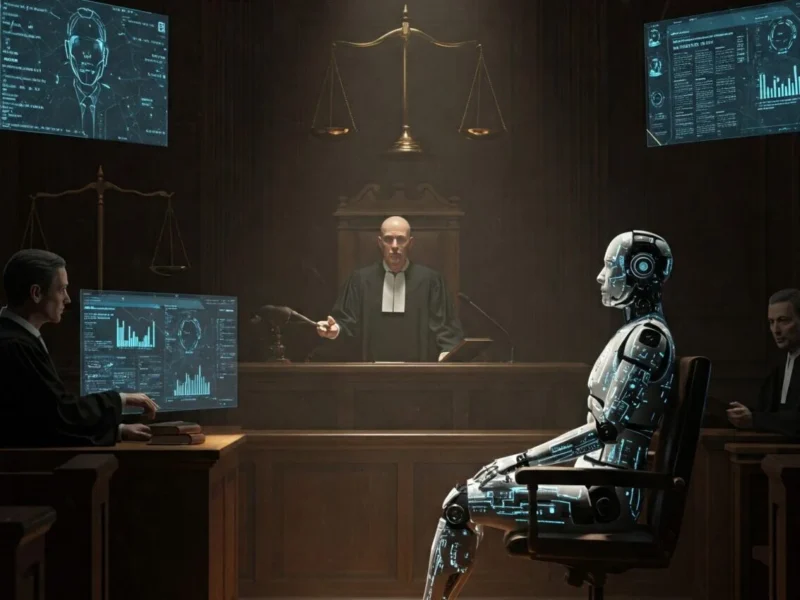

Automated justice: efficiency or illusion?

The idea of a more efficient, neutral and objective justice system, entrusted to the mathematical logic of artificial intelligence, has undeniable appeal. Imagine courts able to analyze massive amounts of data in a few seconds, to recognize patterns that are invisible to humans, and to produce decisions that are quick, consistent, and perhaps free from emotional bias.

A system in which the scales of justice finally tilt toward true impartiality.

But is this really what AI applied to the law promises? Or do we risk confusing efficiency with fairness, and introducing new forms of injustice that go unseen because they are masked by apparent objectivity?

The advantages of artificial intelligence in the legal field

The enthusiasm is understandable. AI-based predictive systems offer many potential advantages:

- Assessment of the risk of reoffending

- Large-scale analysis of case law

- Assistance in drafting legal documents

- Faster procedures and more uniform decision-making

In theory, this could lead to a faster, more consistent and less costly judicial system. AI can uncover connections in the data that escape even lawyers and experts.

Algorithmic bias: the dark heart of predictive justice

However, troubling shadows lurk behind this vision. AI systems can only be as good as the data on which they are trained. If those data reflect inequalities, discriminatory practices or historical prejudices, the algorithm will reproduce them.

This phenomenon is called algorithmic bias. It is not a bug, but an intrinsic characteristic of any AI fed with poor data.

Example: if historical crime data reflect stricter policing of certain ethnic groups, the algorithm may classify those same groups as “higher risk”, even if the reality is more complex.
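To make the example concrete, here is a minimal sketch in Python. All numbers and names (`TRUE_OFFENCE_RATE`, `CHECK_RATE`, `naive_risk_score`) are invented for illustration and are not drawn from any real dataset or system. It assumes two groups that offend at exactly the same rate, with one group checked by police twice as often, and a deliberately naive "risk score" computed only from recorded offences.

```python
# Hypothetical, made-up numbers: both groups offend at the same true rate,
# but group "B" is checked by police twice as often as group "A".
TRUE_OFFENCE_RATE = 0.10
POPULATION = 1000  # people per group
CHECK_RATE = {"A": 0.20, "B": 0.40}  # share of offences that get recorded


def recorded_offences(group: str) -> float:
    """Expected number of offences that end up in the historical data."""
    return POPULATION * TRUE_OFFENCE_RATE * CHECK_RATE[group]


def naive_risk_score(group: str) -> float:
    """A naive 'risk' estimate: recorded offences per person in the group."""
    return recorded_offences(group) / POPULATION


scores = {group: naive_risk_score(group) for group in CHECK_RATE}
for group, score in scores.items():
    print(f"group {group}: naive risk score = {score:.3f}")
```

Under these assumptions, group B receives twice the score of group A even though the underlying behaviour is identical: the score measures how intensely each group was policed, not how risky it is.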

👉 Unjust AI: Bias and Discrimination in Data

👉 AI Now 2018 Report – Fairness in Criminal Justice

The danger of the inhuman algorithm

The biggest risk is not just statistical error. It is the loss of humanity in judgment.

An algorithm does not know the social context, the personal history, or the extenuating circumstances. It cannot feel empathy or grasp moral nuances. Reducing people to a numeric variable means turning judgment into calculation.

Such a system, however efficient, risks being profoundly inhuman.

👉 AI and Surveillance: Who Is Controlling Whom?

How to make AI compatible with justice

This is not about demonizing the technology. AI can genuinely improve the judicial system, but only if:

- the data are clean, fair and representative

- the algorithms are transparent and explainable

- there is always active human oversight

- there are mechanisms to correct errors and challenge decisions
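One way such conditions could be checked in practice is a simple audit that compares error rates between groups. The sketch below is only an illustration: the `records` data, the `MAX_GAP` threshold and the function name `false_positive_rates` are all hypothetical, and false positive rate is just one of several possible fairness measures.

```python
from collections import defaultdict

# Hypothetical audit records: (group, flagged_high_risk, actually_reoffended)
records = [
    ("A", True, True), ("A", False, False), ("A", False, False),
    ("A", True, False), ("A", False, False),
    ("B", True, False), ("B", True, False), ("B", True, True),
    ("B", False, False), ("B", True, False),
]

MAX_GAP = 0.20  # assumed tolerance; a real threshold is a policy decision


def false_positive_rates(rows):
    """Share of people who did NOT reoffend but were flagged, per group."""
    flagged = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted_high_risk, reoffended in rows:
        if not reoffended:
            negatives[group] += 1
            if predicted_high_risk:
                flagged[group] += 1
    return {group: flagged[group] / negatives[group] for group in negatives}


rates = false_positive_rates(records)
gap = max(rates.values()) - min(rates.values())
needs_review = gap > MAX_GAP  # hand the case to human reviewers

print(rates, "gap:", gap, "needs human review:", needs_review)
```

With these invented records, people in group B who did not reoffend are wrongly flagged three times as often as those in group A, so the audit hands the system over to human reviewers rather than letting its output stand.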

What is needed is ethical governance that combines legal knowledge, technology and the humanities.

👉 Ethics of Artificial Intelligence: Why it concerns us all

👉 FRA – Artificial Intelligence and Fundamental Rights

A multidisciplinary, human and political challenge

The future of digital justice requires an open discussion between:

- developers and computer scientists

- judges, lawyers, legal scholars

- philosophers, ethicists, sociologists

- citizens and rights organizations

The goal is not just to integrate the technology. It is to build a system that is fairer, more transparent and more humane, in which AI is a tool in the service of justice, not a mechanism that amplifies its weaknesses.

👉 AI and Democracy: Algorithms and Electoral Processes

The real question

The real question is not: “Can we use AI in the courts?”

But: “How do we do so without losing our idea of justice?”